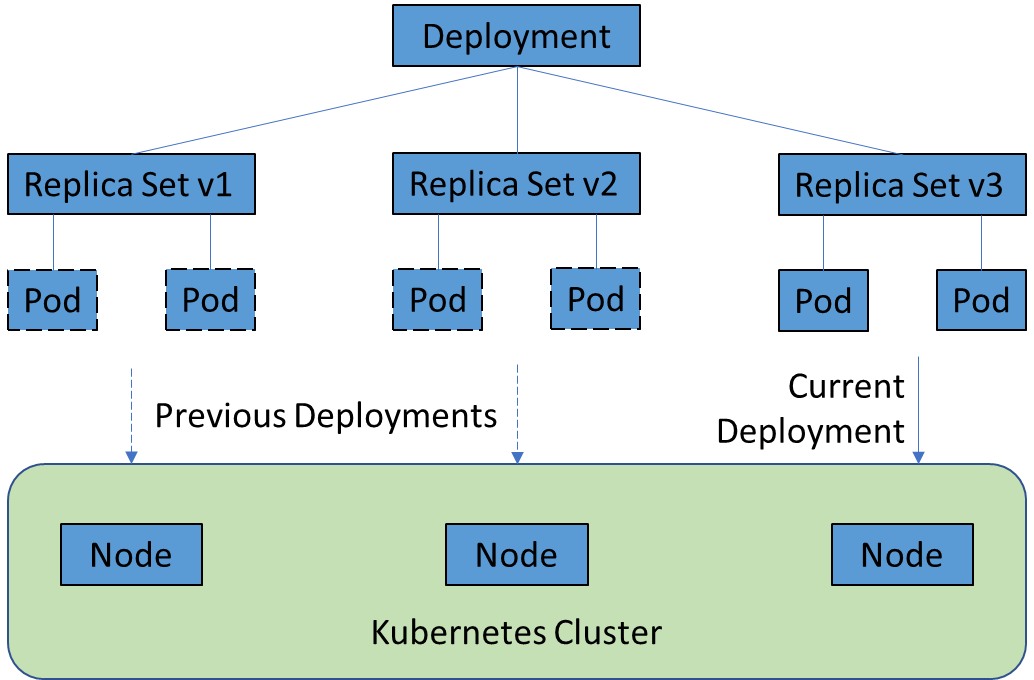

Kubernetes Deployments

The diagram above shows the relationship between Deployments, ReplicaSets, Pods and Nodes.

Every time a new version of the application is rolled out (i.e. the Deployment's Pod template changes), the Deployment creates a new ReplicaSet, which then creates the new set of Pods according to the Deployment's Pod replacement strategy (`RollingUpdate` or `Recreate`).
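As a minimal sketch, the Deployment manifest below sets the Pod replacement strategy explicitly. The name `my-app` and the image `my-app:1.1` are hypothetical. Changing anything under `template`, such as the image tag, makes the Deployment create a new ReplicaSet and scale the old one down as described by `strategy`.

```yaml
# Hypothetical Deployment: name and image are placeholders for illustration.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  strategy:
    type: RollingUpdate      # the default; Recreate deletes all old Pods first (drop rollingUpdate then)
    rollingUpdate:
      maxUnavailable: 1      # at most one Pod below the desired count during the update
      maxSurge: 1            # at most one Pod above the desired count during the update
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: my-app:1.1  # editing the template (e.g. this tag) triggers a new ReplicaSet
```

Applying an edited manifest with `kubectl apply -f` starts the rollout; `kubectl rollout status deployment/my-app` can be used to follow it.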

ReplicationController vs ReplicaSet

See section 4.3.1 of the book Kubernetes in Action for more details.

ReplicationController

ReplicationControllers are less declarative and more imperative than ReplicaSets.

Supports only equality-based selectors, e.g. `env = production`, which selects all resources (Pods) whose `env` label equals `production`.

The `kubectl rolling-update` command (now deprecated and removed from recent kubectl releases) is used for updating a ReplicationController. Defining a selector is optional: if it is not defined, it defaults to the labels in the ReplicationController's Pod template. So the controller assumes that Pods carrying the labels from the same definition file fall under its control.
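A minimal ReplicationController manifest, sketched with hypothetical names (`my-app-rc`, image `my-app:1.0`), shows the equality-based selector. The selector is a plain map of labels that must all match exactly.

```yaml
# Hypothetical ReplicationController: name and image are placeholders.
apiVersion: v1
kind: ReplicationController
metadata:
  name: my-app-rc
spec:
  replicas: 3
  # Equality-based selector: matches Pods with app=my-app AND env=production.
  # If omitted, it defaults to the labels of the Pod template below.
  selector:
    app: my-app
    env: production
  template:
    metadata:
      labels:
        app: my-app
        env: production
    spec:
      containers:
        - name: my-app
          image: my-app:1.0
```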

ReplicaSet

ReplicaSets are newer than ReplicationControllers and are their recommended replacement.

ReplicaSets are more declarative than ReplicationControllers and have more expressive Pod selectors.

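A ReplicaSet requires a selector (`spec.selector` is a mandatory field in the YAML definition file) to know which Pods it should manage, and that selector can be set-based. This allows one ReplicaSet to match Pods across multiple environments, e.g. `environment in (production, qa)`.

ReplicaSets are usually not updated directly. Instead, they are managed by a Deployment, whose rollouts are controlled with the `kubectl rollout` command, which is why a ReplicaSet works best with Deployments.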
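The set-based selector `environment in (production, qa)` could be written as the sketch below, using `matchExpressions`. The names `my-app-rs` and `my-app:1.0` are hypothetical, and the Pod template's labels must satisfy the selector or the API server rejects the object.

```yaml
# Hypothetical ReplicaSet: name and image are placeholders.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: my-app-rs
spec:
  replicas: 3
  selector:                       # mandatory in apps/v1
    matchExpressions:
      - key: environment
        operator: In              # set-based: environment in (production, qa)
        values: ["production", "qa"]
  template:
    metadata:
      labels:
        app: my-app
        environment: production   # satisfies the In expression above
    spec:
      containers:
        - name: my-app
          image: my-app:1.0
```

In practice the same `selector` and `template` would more often sit inside a Deployment, which then creates and replaces ReplicaSets like this one on each rollout.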

Essentially, the difference comes down to older rolling updates driven imperatively (ReplicationController) versus Deployments defined declaratively in YAML or JSON files (ReplicaSet).

Node Affinity

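Node affinity (and anti-affinity) allows Pods to express a hard requirement, a soft preference, or a repulsion for certain nodes, which the simple `nodeSelector` matching mechanism cannot provide. This is because `nodeSelector` only supports exact label matches, all of which must hold, and not more expressive set-based criteria such as OR across alternatives or negation with operators like `NotIn` and `DoesNotExist` (which is how anti-affinity is expressed).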

The supported Node Affinity Types are:

Available (rules apply when scheduling new Pods and are ignored for Pods that are already running):

requiredDuringSchedulingIgnoredDuringExecution: hard requirement rule based on label selection.

preferredDuringSchedulingIgnoredDuringExecution: soft preference rule based on label selection.

Planned (to be supported in the future):

requiredDuringSchedulingRequiredDuringExecution: Pods already running on a node will be evicted when the node no longer satisfies the rule, e.g. when the required label is removed from it.
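The two available rule types can be combined in one Pod spec, as in the sketch below. The zone values `zone-a`/`zone-b` and the `disktype` label are hypothetical; `topology.kubernetes.io/zone` is the well-known zone label. Multiple `nodeSelectorTerms` are ORed, while `In` already expresses an OR over values, which `nodeSelector` cannot do.

```yaml
# Hypothetical Pod: zone values and the disktype label are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: affinity-demo
spec:
  affinity:
    nodeAffinity:
      # Hard requirement: only schedule on nodes in zone-a OR zone-b.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: topology.kubernetes.io/zone
                operator: In
                values: ["zone-a", "zone-b"]
      # Soft preference (repulsion): among eligible nodes, prefer those whose disktype is not hdd.
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1               # 1-100; higher weights count more in scoring
          preference:
            matchExpressions:
              - key: disktype
                operator: NotIn
                values: ["hdd"]
  containers:
    - name: app
      image: nginx
```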

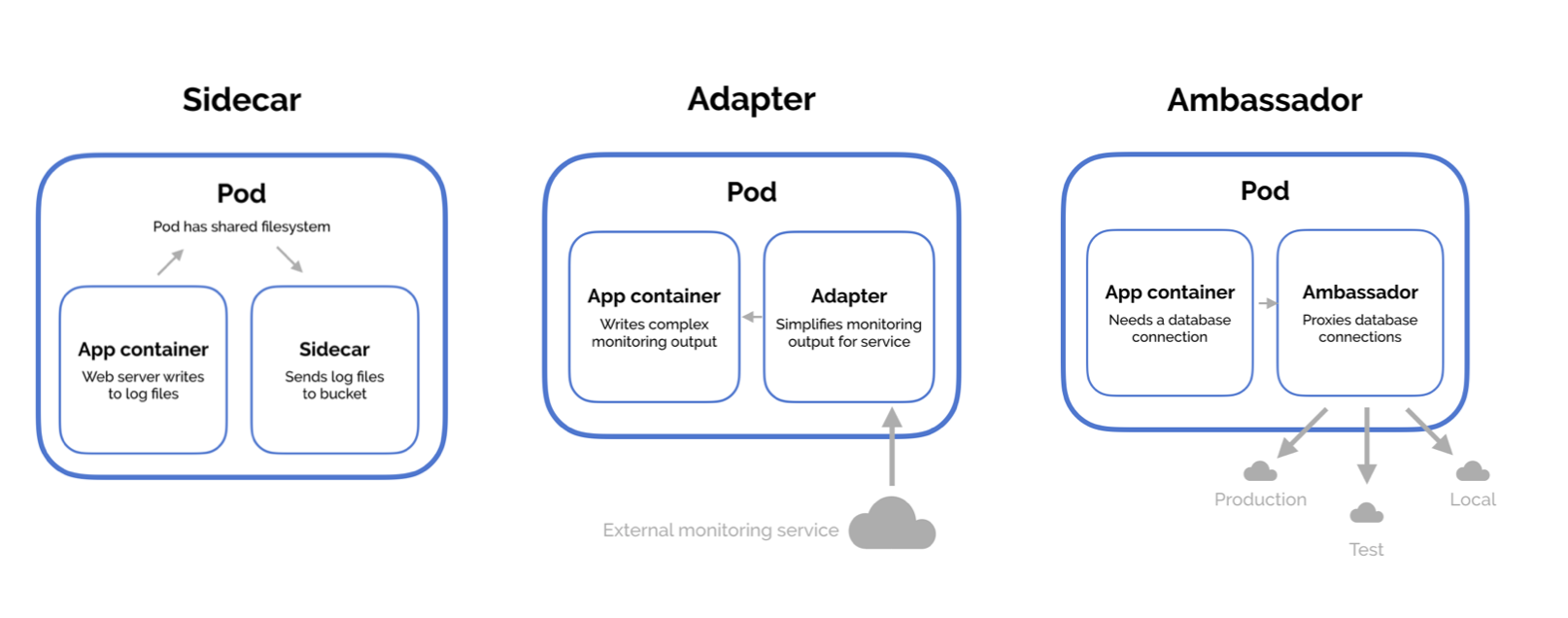

Multi-Container Pods

We use multi-container Pods when the containers share the same life cycle or need to run on the same node. A common use case is a helper process (such as a logging agent) that must run on the same node as the primary container.

The different pod design patterns available for multi-container pods are:

Sidecar: Consists of a main application and a helper application. The helper application is isolated so that even if it goes down, it does not affect the main application.

Adapter: Used when the primary application's output does not satisfy a required format and changing the primary application is difficult. A secondary (adapter) container transforms the primary application's output into the desired output format.

Ambassador: Facilitates the primary container's connections with the outside world. The secondary container acts as a proxy: other containers in the Pod connect to a port on localhost, and the ambassador forwards these connections to the real destination. For example, the ambassador can route database access to a local database in development and to an external one in testing and production, or send requests to different shards of the database. The primary application itself stays focused on its main business functions.
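A minimal sidecar sketch: the main container and a helper share an `emptyDir` volume, so the helper can read the log file the main container writes. The Pod and container names are hypothetical, and `busybox` with `tail -f` stands in for a real logging agent, which would ship the lines elsewhere instead of printing them.

```yaml
# Hypothetical sidecar Pod: names are placeholders; busybox stands in for real images.
apiVersion: v1
kind: Pod
metadata:
  name: sidecar-demo
spec:
  volumes:
    - name: logs              # scratch volume shared by both containers, lives as long as the Pod
      emptyDir: {}
  containers:
    - name: app               # main application: appends a timestamp to a log file every 5 s
      image: busybox
      command: ["sh", "-c", "while true; do date >> /var/log/app/app.log; sleep 5; done"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
    - name: log-agent         # sidecar: streams the same file to its own stdout
      image: busybox
      command: ["sh", "-c", "touch /var/log/app/app.log; tail -f /var/log/app/app.log"]
      volumeMounts:
        - name: logs
          mountPath: /var/log/app
```

Running `kubectl logs sidecar-demo -c log-agent` should show the timestamps written by `app`; if `log-agent` crashes, `app` keeps running, which is the isolation the sidecar pattern relies on.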

Taints & Tolerations

Taints are set on nodes, while tolerations are set on Pods.

Tainting a node is like marking it to only accept Pods with a matching toleration. E.g., a node tainted with the key-value pair `department=marketing` will only accept Pods that have a toleration for the same key-value pair. However, a taint does not prevent a tolerant Pod from being deployed on a node without any taints.

Taints and tolerations are a flexible way to steer Pods away from nodes or to evict Pods that shouldn't be running on a node. Some use cases include:

Dedicated Nodes: A set of nodes is exclusively meant for a particular group of users. For this, you can add a taint for that user group to these nodes, e.g.

`$ kubectl taint nodes <node_name> dedicated=groupName:NoSchedule`

Special Hardware Nodes: A subset of nodes has specialised hardware such as GPUs, and we only want Pods that require GPU processing to run on these nodes.
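For the dedicated-nodes case, the group's Pods need a toleration matching the `dedicated=groupName:NoSchedule` taint. A minimal sketch follows; the Pod name `group-pod` is hypothetical.

```yaml
# Hypothetical Pod for the user group; the toleration matches the taint from the kubectl command above.
apiVersion: v1
kind: Pod
metadata:
  name: group-pod
spec:
  tolerations:
    - key: "dedicated"
      operator: "Equal"       # key and value must both match the taint
      value: "groupName"
      effect: "NoSchedule"
  containers:
    - name: app
      image: nginx
```

Because a toleration only permits scheduling onto the tainted nodes and does not require it, the group's Pods could still land on untainted nodes. Pairing the taint with a node label and a node affinity rule on that label is one way to keep them on the dedicated nodes.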

The taint effect defines what happens to Pods that do not tolerate the taint. The different taint effects are:

NoSchedule: New Pods will not be scheduled on the node. Existing Pods already running on it will not be evicted.

PreferNoSchedule: The scheduler tries to avoid placing a Pod on this node, but this is not strictly enforced.

NoExecute: Evicts already-running Pods from the tainted node and prevents new non-tolerant Pods from being scheduled on it, which makes it useful at runtime.
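With `NoExecute`, a toleration can also set `tolerationSeconds`, which lets a Pod keep running for a limited time after the taint is added before it is evicted. In the sketch below, the taint `maintenance=true:NoExecute` and the Pod name are hypothetical.

```yaml
# Hypothetical taint, added with: kubectl taint nodes <node_name> maintenance=true:NoExecute
apiVersion: v1
kind: Pod
metadata:
  name: noexecute-demo
spec:
  tolerations:
    - key: "maintenance"
      operator: "Equal"
      value: "true"
      effect: "NoExecute"
      tolerationSeconds: 300  # stay bound for up to 5 minutes after the taint appears, then evict
  containers:
    - name: app
      image: nginx
```

Pods without this toleration are evicted as soon as the taint is added. The taint can be removed again by repeating the command with a trailing `-`, e.g. `kubectl taint nodes <node_name> maintenance=true:NoExecute-`.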